AI systems are deeply interwoven into the fabric of modern life. If you use an iPhone, you are already using a form of verifiable machine learning, probably without knowing it. Lifting the phone to your face and unlocking it with Face ID engages multiple machine learning algorithms: convolutional neural networks for face detection, landmark detection algorithms for face alignment, and deep learning models for feature representation. This everyday moment is a prime example of verifiable machine learning in action, and it touches millions of lives daily.

However, Face ID’s verification process is confined to Apple’s hardware, limiting the models that can be run securely and privately. EZKL aims to extend these affordances and make them arbitrarily programmable. The goal is to enable developers to run almost any machine learning model on any device privately and securely. In other words, EZKL democratizes the technology that keeps Face ID secure.

Before we get into technical details, let’s examine the mechanics of Face ID.

Face ID: Verification in Your Pocket

Unlocking your iPhone with Face ID triggers a sophisticated verification system. Your phone isn’t just running a facial recognition algorithm—it’s running that algorithm inside what’s called a Trusted Execution Environment, or TEE.

Apple’s TEE (which they call the Secure Enclave) is essentially a separate computer within your phone. It has its own processor, memory, and operating system (OS), all isolated from the main system. Even if your phone’s main OS is compromised, the TEE remains secure.

Here’s what happens during Face ID verification:

- The TrueDepth camera system captures a detailed 3D map of your face using device-specific query points

- This data is immediately sent to the Secure Enclave

- Inside this protected environment, a neural network compares this map against your enrolled facial data, which is encrypted and protected with a key available only to the Secure Enclave.

- The TEE then provides a simple yes/no answer to the main system: “Is this the authorized user?”

This system is rather elegant: facial data never leaves the Secure Enclave and the main OS receives only the verification result, not the sensitive biometric information itself.
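The flow above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not Apple’s implementation: the `ToyEnclave` class, the cosine-similarity matcher (standing in for the neural network), and the 0.9 threshold are all hypothetical, and a real TEE enforces isolation in hardware, which a Python class cannot do.

```python
import math


def _cosine_similarity(a, b):
    """Stand-in for the neural network's match score (an assumption)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ToyEnclave:
    """Hypothetical stand-in for a TEE: it keeps the enrolled template private."""

    def __init__(self, threshold=0.9):
        self._template = None        # enrolled data; no method ever returns it
        self._threshold = threshold  # illustrative value, not Apple's

    def enroll(self, face_map):
        """Store a copy of the enrolled face map inside the enclave."""
        self._template = list(face_map)

    def verify(self, face_map):
        """Return only a yes/no answer; the template and score stay inside."""
        if self._template is None:
            return False
        return _cosine_similarity(self._template, face_map) >= self._threshold
```

The point of the design is the narrow interface: a caller such as a banking app only ever calls `verify` and receives a boolean, so it has no path to the enrolled data or even to the similarity score.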

But this verification doesn’t stop at unlocking your phone: other applications can leverage the verified identity without ever accessing your facial data. This creates a powerful verification ecosystem where third-party developers can build high-security applications that rely on biometric verification without implementing their own complex verification systems or handling sensitive data, both of which can be very expensive. A banking app doesn’t need to know how to process facial recognition; it just needs to trust Apple’s verification that you are who you claim to be.

This consumption of verification represents a significant change in how data is managed: complex, sensitive computation happens in a secure environment, and only the verified result is shared with the applications that need it. The verification is both highly secure and highly usable, an elegant balance that most security systems struggle to achieve. One core insight here is that verification often also gives users privacy, because the data and the computation on it are never seen by the applications that consume the verified result.

So the TL;DR for verifiable ML is this:

Applications don’t need to handle sensitive user data at all, yet they get a strong guarantee that the user ran a model as intended (e.g., a face recognition model for identity), so operational security improves. Conversely, users get privacy by default because their data never leaves their device.

The Limitations of Apple’s Approach

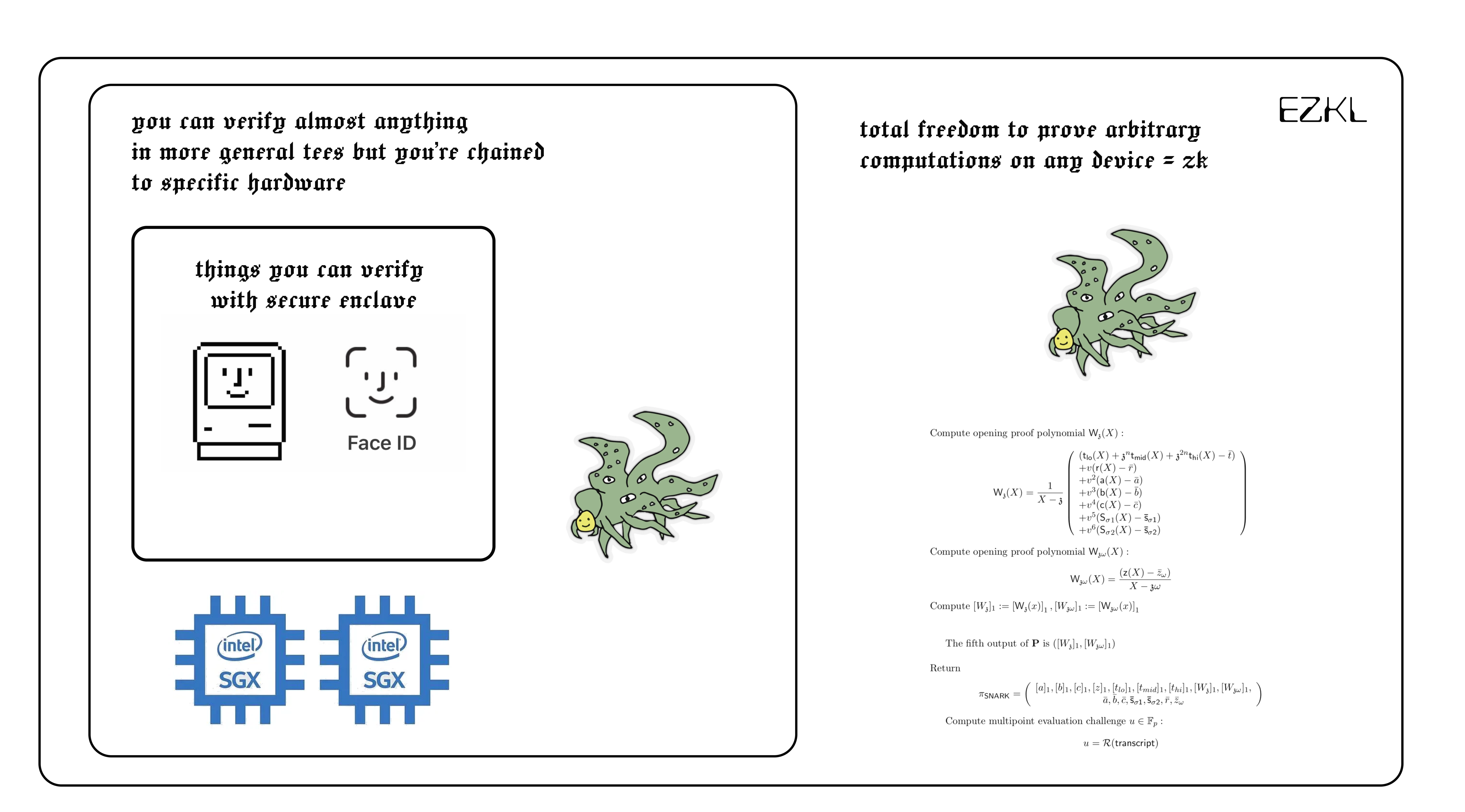

While Apple’s TEE represents an impressive implementation of verified machine learning, it comes with important limitations. The Secure Enclave is purposely restricted in what it can do.

For security reasons, Apple’s TEE isn’t arbitrarily programmable. You can’t simply load any ML model you wish into it. Instead, it runs a limited set of pre-approved models focused on specific tasks like facial recognition, fingerprint verification, and secure payment processing.

This restriction creates a trade-off. On one hand, it enhances security by limiting the potential attack surface. On the other hand, it means the verification capabilities are limited to what Apple has implemented. If you wanted to verify a different kind of ML model—perhaps one that analyzes health data or performs some specialized analysis—you couldn’t leverage the Secure Enclave for that purpose.

Programmable TEEs: Expanding Possibilities with Hardware Constraints

Beyond Apple’s approach, other Trusted Execution Environments offer more flexibility while still maintaining strong security guarantees. Intel’s Software Guard Extensions (SGX) and AMD’s Secure Encrypted Virtualization (SEV) are prominent examples.

These TEEs allow developers to create what are called “enclaves”—secure execution environments where sensitive code can run protected from the rest of the system. Unlike Apple’s Secure Enclave, these environments are programmable, meaning developers can deploy custom ML models inside them.

For example, a hospital might use SGX to create an enclave that runs a machine learning model analyzing patient data. The model operates on sensitive information within the protected environment, and only outputs aggregated results or specific insights that don’t compromise patient privacy.

However, these solutions still face an important limitation: they’re chained to specific hardware. Your verification capability is tied to particular processors from particular manufacturers. This creates several challenges:

- Hardware dependencies limit deployment options

- You’re reliant on the hardware manufacturer’s security practices

- If vulnerabilities are discovered in the hardware (as has happened with SGX), your security guarantees may be compromised and you may need to swap out hardware entirely.

- Scaling can become expensive because it requires specific physical hardware, and the approach may not work on mobile (e.g., Apple’s Secure Enclave, which is not programmable).

It’s like having a more flexible vault, but one that can only be installed in certain buildings and might need to be replaced whenever security flaws are discovered, which happens often.

Zero-Knowledge: Verification Beyond Hardware Constraints

This is where zero-knowledge proofs (ZKPs) enter the picture, representing a fundamental shift in how we can verify machine learning models and statistical computations. Thanks to relatively recent advances in cryptography, one party (the prover) can now convince another party (the verifier) that a statement is true, or that a computation was performed correctly, with overwhelming statistical confidence and with minimal or no trust between the parties.

When applied to machine learning, ZKPs enable something remarkable: the ability to verify that a model was run end to end and wasn’t tampered with, without re-running the model or seeing its internal workings.
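To give a feel for the prover/verifier relationship, here is a minimal sketch of a classic zero-knowledge protocol: a Schnorr proof of knowledge made non-interactive with the Fiat-Shamir heuristic. It proves knowledge of a secret exponent, not the execution of an ML model, and it is not the proof system EZKL uses. The group parameters are toy-sized assumptions chosen for readability and are far too small to be secure.

```python
import hashlib
import secrets

# Toy parameters (insecure, illustration only): P = 2*Q + 1 with P and Q prime,
# and G = 4 generates the subgroup of prime order Q in the integers mod P.
P, Q, G = 2039, 1019, 4


def _challenge(y, t):
    """Fiat-Shamir: derive the verifier's challenge by hashing public values."""
    digest = hashlib.sha256(f"{G}|{y}|{t}".encode()).digest()
    return int.from_bytes(digest, "big") % Q


def prove(secret_x):
    """Prover: show knowledge of x with y = G^x mod P, without revealing x."""
    y = pow(G, secret_x, P)            # public key
    k = secrets.randbelow(Q - 1) + 1   # fresh random nonce in [1, Q-1]
    t = pow(G, k, P)                   # commitment
    c = _challenge(y, t)
    s = (k + c * secret_x) % Q         # response
    return y, (t, s)


def verify(y, proof):
    """Verifier: check G^s == t * y^c (mod P) using only public values."""
    t, s = proof
    c = _challenge(y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P
```

The verifier never sees `secret_x`, only `y` and the pair `(t, s)`, yet a proof that has been altered in transit no longer satisfies the check. Proof systems for ML apply the same idea to a much larger statement: "this output is the result of running this model on some input."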

Here’s why this matters for verifiable ML:

- Hardware Independence: Unlike TEEs, ZK systems don’t require specialized hardware. Proof generation and verification can happen on any device.

- Model Flexibility: Instead of being limited to a few pre-approved models, modern ZK provers can generate proofs for virtually any computation, including arbitrary ML models and statistical analyses.

- Public Verifiability: There can be many consumers of the proof, not just those with access to specific hardware (as is the case for an iOS app). You can share the proof over the web with friends and family, all running different devices, and each of them can verify it.

- Scalability: The verification process is typically much less computationally intensive than re-running the model itself.
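The scalability point, that checking a result can be much cheaper than recomputing it, has a well-known classical illustration: Freivalds’ algorithm for checking a matrix product. This is a swapped-in technique, not a zero-knowledge proof and not how EZKL works, but matrix multiplication is the core operation of most ML models, so it shows the asymmetry clearly. The function names below are illustrative.

```python
import random


def matmul(a, b):
    """Naive O(n^3) matrix product: the expensive computation."""
    inner, cols = len(b), len(b[0])
    return [
        [sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for row in a
    ]


def matvec(m, v):
    """Matrix-vector product, O(n^2)."""
    return [sum(x * y for x, y in zip(row, v)) for row in m]


def freivalds_check(a, b, c, rounds=20):
    """Probabilistically check that a @ b == c in O(rounds * n^2) time.

    A correct c always passes. A wrong c passes a single round with
    probability at most 1/2, so it survives all rounds with probability
    at most 2 ** -rounds.
    """
    for _ in range(rounds):
        r = [random.randint(0, 1) for _ in range(len(c[0]))]
        # Compare a @ (b @ r) with c @ r: three matrix-vector products,
        # never a full matrix-matrix product.
        if matvec(a, matvec(b, r)) != matvec(c, r):
            return False
    return True
```

The checker never multiplies the two matrices together, so its cost grows quadratically with matrix size while recomputation grows cubically. ZK verifiers exploit a similar gap between doing the work and checking it, with the added property that the inputs can stay hidden.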

Let’s consider a concrete example: a research institution has developed a complex ML model that analyzes medical images to detect early signs of disease. Using zero-knowledge, they could:

- Run the model on sensitive patient data

- Generate a cryptographic proof that the model was executed correctly

- Allow doctors, regulators, or patients to verify this proof without seeing the private data or needing to re-run the expensive computation

This expands the scope of verification from just a handful of models running in TEEs to potentially any ML model or statistical analysis, while maintaining strong privacy and security guarantees.

With the Secure Enclave, you can verify only the computations that Apple has approved and loaded in at fabrication. That is great for safety, but if you’re willing to trade some of that safety for flexibility, you get generally programmable TEEs: you can verify most things, but they get exploited a lot. ZK, while computationally more expensive, lets you generate verifiable computations on any device: freedom!

Why Verifiable ML and Statistics Matter Now

A worthy question, then, is why focus on verifiable ML rather than arbitrary computation. Specialization often brings performance advantages over general-purpose systems, along with a better developer experience: a Swiss Army knife is more cumbersome and slower to use than a single blade. EZKL is dedicated to verifiable ML and statistics and will outperform more generic ZK frameworks that aren’t as specialized (as seen here).

This specialization would be without merit if ML and statistics lacked impact, but that is not the case. These algorithms are being deployed in extremely high-stakes environments, where a single model inference intercepted by an adversary, or hardware that fails and starts producing corrupted results, can have dramatic consequences.

Imagine a world where Face ID models could be tampered with by adversaries. They could potentially authenticate and log in as you without even needing access to your face.

Threat Vectors: Hardware Failures and Adversarial Attacks

As machine learning systems scale to handle critical operations, two major threat categories emerge that demand robust verification solutions for deployed ML models:

Hardware Corruption and Silent Failures: At scale, hardware degradation becomes not just possible but inevitable. When running thousands of GPUs or specialized ML accelerators in data centers, subtle hardware errors can silently corrupt model outputs without triggering obvious system failures, something we’ve experienced when building out Lilith. These “soft errors” can result from cosmic ray impacts, manufacturing defects that manifest over time, or components operating at thermal limits, and they happen all the time in large organizations. Verification mechanisms that can detect when hardware is producing incorrect results become essential safeguards against these invisible threats. In fact, whenever our hardware on Lilith starts to malfunction or corrupt data, we detect it immediately because the proofs we generate fail to verify.

Inference-Time Attacks: Even with properly functioning hardware and correctly trained models, adversaries can target the inference process itself:

- At the input stage, attackers can craft adversarial examples—inputs specifically designed to fool ML systems while appearing normal to humans. A subtly modified stop sign might be misclassified as a yield sign by an autonomous vehicle, with potentially fatal consequences. If the data is attested to (say by existing on-chain), this can be resolved using ZK, but otherwise this remains an open problem to solve.

- During inference execution, side-channel attacks can extract sensitive information by monitoring timing, power consumption, or electromagnetic emissions from the hardware running the model. This allows attackers to steal proprietary model parameters or even extract private data from the inputs. ZK can help mitigate this by masking intermediate computations, but even then it remains an open problem.

- At the output stage, man-in-the-middle attacks can intercept and modify model decisions before they reach their intended destinations. In a high-frequency trading system, intercepting and delaying a “buy” signal by even milliseconds could allow an attacker to front-run trades and manipulate markets. This is where TEEs and ZK can really shine.
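For the output-stage case, the simplest building block for detecting modification in transit is a message authentication code. The sketch below uses HMAC-SHA256 from the Python standard library; it is a swapped-in, conventional technique rather than a TEE attestation or a ZK proof, and the key and field names are hypothetical. It assumes the model host and the consumer already share a secret key, and it detects tampering only: it does not detect delays, and it says nothing about whether the model itself ran correctly.

```python
import hashlib
import hmac
import json


def sign_output(key, output):
    """Compute an HMAC-SHA256 tag over a canonical JSON encoding of the output."""
    payload = json.dumps(output, sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_output(key, output, tag):
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(sign_output(key, output), tag)
```

A ZK proof gives a stronger guarantee than this: instead of binding the output to a shared key, it binds the output to the computation that produced it, and anyone can check it without holding a secret.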

These threats are particularly concerning because they can bypass traditional security measures that focus on protecting model integrity before deployment. Comprehensive verification solutions must address the entire lifecycle of model operation, from training through deployment to each individual inference. ZK can begin to verify broad parts of this pipeline and help protect against inference time attacks and hardware failure.

Where Are ML Models Most Vulnerable?

- Financial Infrastructure: Major financial institutions now rely on complex statistical models for everything from fraud detection to credit approval to algorithmic trading. These systems collectively influence trillions of dollars in capital flows daily. When JPMorgan Chase implements ML systems to detect fraudulent transactions, they need absolute certainty that the models being executed are the ones that were originally deployed.

- Critical Infrastructure Security: Power grids, water systems, and transportation networks increasingly rely on AI for monitoring and threat detection. The Israeli electric grid uses ML systems to identify potential cyber attacks by analyzing patterns in network traffic. These systems form the last line of defense against potentially catastrophic attacks. Computational integrity becomes a matter of national security and public safety.

- Decentralized Finance Protocols: In DeFi, automated market makers, lending platforms, and derivatives exchanges collectively manage hundreds of billions in assets through algorithmic systems. Aave and Compound, two major lending protocols, use complex statistical models to determine safe loan-to-value ratios and liquidation thresholds. The on-chain nature of these systems means verification isn’t just about correctness—it’s about maintaining the trust assumptions that allow these permissionless systems to function at all. Unlike traditional finance, there are no central authorities to roll back transactions or compensate victims if models behave unexpectedly: failures are permanent.

- Authentication Systems: As noted above with Face ID, authentication systems are enticing targets for adversaries.

Looking Ahead

The evolution of verification has progressed from specialized hardware environments like Apple’s Secure Enclave to more flexible but still hardware-dependent solutions like Intel SGX, and now toward hardware-independent approaches using zero-knowledge proofs.

At EZKL, we are committed to developing a system that is as secure and flexible as possible, so that developers who demand the highest guarantees of verifiability are satisfied. We’re here to make deployed ML systems as secure and as privacy-preserving as possible on any device.