The blockchain industry is experiencing unprecedented excitement around AI integration, with major funding flowing into “Crypto x AI” projects. However, this enthusiasm should be tempered with caution. Without cryptographic proofs of algorithmic integrity, blockchain systems risk becoming mere databases of unverified external computation.

The Problem

AI and ML systems have demonstrated fundamental vulnerabilities: they can behave unpredictably, be manipulated through adversarial attacks, and produce unreliable outputs. These problems compound as models grow in size [1].

When AI is coupled with blockchains, where errors and exploits can’t always be recovered from, the flaws and weaknesses of AI models become more consequential and therefore more pressing to address.

Naively having an algorithm interact with a blockchain compromises the blockchain’s fundamental value proposition: verifiable execution. Any system built this way is unsafe for downstream users.

Consider a DeFi protocol relying on an ML model for risk assessment. Running this model off-chain means users must trust that: (1) the claimed model matches what’s actually being executed, (2) the model hasn’t been tampered with, (3) someone shows up to run the model, and (4) the inputs and computation process are those intended. However, a motivated attacker could:

- Deploy a compromised model that underperforms due to training-data poisoning [2],

- Manipulate input data before it reaches the model [3],

- Execute adversarial attacks on inputs to force model misprediction [3][4],

- Compromise and tamper with model weights during execution,

- Intercept and modify outputs before on-chain submission, or

- Prevent the model from being run at all, or delay its updates.
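To make the trust gap concrete, here is a minimal Python simulation of the naive pattern, assuming a hypothetical `NaiveRiskOracle` contract that accepts a risk score from one whitelisted relayer. Nothing it stores ties the submitted number to the model, the inputs, or the execution, so trust assumptions (1) to (4) all collapse into trusting the relayer. The `honest_model` function is a made-up stand-in for the real risk model.

```python
class NaiveRiskOracle:
    """Hypothetical on-chain oracle, simulated in Python (not a real contract)."""

    def __init__(self, relayer: str):
        self.relayer = relayer   # the single trusted submitter
        self.risk_score = None   # latest score the protocol consumes

    def submit(self, sender: str, score: int) -> None:
        # The only check is WHO submits, never HOW the score was computed.
        if sender != self.relayer:
            raise PermissionError("only the relayer may submit")
        self.risk_score = score


def honest_model(features: list[int]) -> int:
    # Stand-in for the off-chain ML model; any deterministic function works here.
    return sum(features) % 100


oracle = NaiveRiskOracle(relayer="0xRelayer")
features = [12, 40, 7]
honest_score = honest_model(features)  # what the model actually outputs
tampered_score = 0                     # output rewritten before on-chain submission
oracle.submit("0xRelayer", tampered_score)
```

The oracle stores the tampered value without complaint. If the relayer is compromised, or simply never calls `submit`, the protocol has no way to notice.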

The liquidity environment in crypto means that any exploit gets pwned to the max, particularly with flash loans. Every additional unverified off-chain computation multiplies the windows and methods of attack. Unfortunately, most crypto x AI projects today leave these windows wide open, which is a blocker for putting AI in charge of anything that matters.

Solutions

Proof of Inference

Zero-knowledge proofs and verifiable computation offer solutions to this problem.

Using systems like zk-SNARKs, an algorithm’s execution can be proven and then verified on-chain while keeping some parts of the implementation private. This enables verification that:

- The exact specified algorithm was used

- All inputs were processed correctly

- The results were not manipulated or altered
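One way to read these three guarantees is as a relation that the proof attests to. The Python sketch below spells that relation out, assuming a toy integer linear model and SHA-256 as the weight commitment (both illustrative choices, not what any particular proving system uses). A real zk-SNARK would prove that `relation` holds without revealing `weights`; here it is simply executed, which is neither private nor succinct, so treat it as a specification of what is proven rather than how.

```python
import hashlib
import json


def commit(weights: list[int]) -> str:
    # Illustrative commitment: SHA-256 over a canonical JSON encoding of the weights.
    return hashlib.sha256(json.dumps(weights).encode()).hexdigest()


def model(weights: list[int], inputs: list[int]) -> int:
    # Toy integer linear model standing in for the real network.
    return sum(w * x for w, x in zip(weights, inputs))


def relation(public: dict, weights: list[int]) -> bool:
    """True iff the private weights match the published commitment
    and produce the claimed output on the claimed inputs."""
    same_model = commit(weights) == public["model_commitment"]          # exact algorithm used
    same_output = model(weights, public["inputs"]) == public["output"]  # inputs processed, result unaltered
    return same_model and same_output


weights = [2, -1, 3]  # private witness
public = {"model_commitment": commit(weights), "inputs": [4, 5, 6], "output": 21}
```

Changing the claimed output, or swapping in different weights, makes `relation` return `False`.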

Implementation approaches include:

- Arithmetic circuits for representing algorithmic logic

- Recursive SNARKs (or STARKs) for complex computational proofs

- Verifiable delay functions for time-bound execution
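To give a feel for the first approach, here is a minimal Python sketch that expresses a dot product, the core operation of a linear layer, as a rank-1 constraint system (R1CS), one common arithmetic-circuit format consumed by SNARK toolchains. Assumptions: the toy prime 2**61 - 1 stands in for a real proving field, and inputs and weights are already quantized to integers (negative values wrap around the modulus). Only constraint satisfaction is checked; no proof is generated.

```python
P = 2**61 - 1  # toy prime modulus; real systems use the proving curve's scalar field

# A linear combination is a {witness_index: coefficient} dict.
# An R1CS constraint (a, b, c) requires <a, z> * <b, z> == <c, z> (mod P).


def dot_product_r1cs(n: int):
    """Constraints for y = sum(x_i * w_i).
    Witness layout z = [1, x_0..x_{n-1}, w_0..w_{n-1}, m_0..m_{n-1}, y]."""
    x, w, m, y = 1, 1 + n, 1 + 2 * n, 1 + 3 * n  # block start offsets, then index of y
    constraints = [({x + i: 1}, {w + i: 1}, {m + i: 1}) for i in range(n)]  # m_i = x_i * w_i
    constraints.append(({m + i: 1 for i in range(n)}, {0: 1}, {y: 1}))     # (sum m_i) * 1 = y
    return constraints, y + 1  # constraints and witness length


def evaluate(lc: dict, z: list[int]) -> int:
    return sum(coeff * z[idx] for idx, coeff in lc.items()) % P


def is_satisfied(constraints, z: list[int]) -> bool:
    return all(evaluate(a, z) * evaluate(b, z) % P == evaluate(c, z) for a, b, c in constraints)


def make_witness(xs: list[int], ws: list[int]) -> list[int]:
    # Quantized (integer) inputs and weights; negatives wrap to P - |v|.
    fx = [v % P for v in xs]
    fw = [v % P for v in ws]
    ms = [a * b % P for a, b in zip(fx, fw)]
    return [1] + fx + fw + ms + [sum(ms) % P]


constraints, size = dot_product_r1cs(3)
z = make_witness([3, -1, 4], [2, 5, -2])
```

Here `z[-1]` encodes 6 - 5 - 8 = -7 as a field element, and flipping any single witness value breaks at least one constraint. Real ML circuits repeat this pattern across every layer, plus extra gadgets for non-linear activations.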

The resulting proofs can be verified on-chain by a corresponding verifier contract. In addition, if anyone can compute and prove the update trustlessly, there is no reliance on a human analyst, and no single party can halt the protocol’s forward motion.
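The permissionless-update property can be sketched as a contract that accepts a new value from any sender, provided the attached proof verifies against a fixed model commitment. In this Python simulation, `VerifiedRiskOracle` is hypothetical and `verify` is injected at construction time; a real deployment would call the verifier contract generated for the chosen proof system. The demo `toy_verify` re-executes a known toy model and ignores the proof bytes, purely so the example runs.

```python
from typing import Callable

Verifier = Callable[[dict, bytes], bool]


class VerifiedRiskOracle:
    """Hypothetical proof-gated oracle, simulated in Python (not a real contract)."""

    def __init__(self, model_commitment: str, verify: Verifier):
        self.model_commitment = model_commitment  # fixed at deployment
        self.verify = verify                      # stands in for a generated verifier contract
        self.risk_score = None

    def submit(self, sender: str, inputs: list[int], output: int, proof: bytes) -> None:
        # No sender whitelist: anyone may push an update, so no single party can stall it.
        public = {"model_commitment": self.model_commitment, "inputs": inputs, "output": output}
        if not self.verify(public, proof):
            raise ValueError("proof rejected")
        self.risk_score = output


# Demo-only verifier: re-executes a known toy model and ignores the proof bytes.
# A real verifier would check a succinct proof and never see the weights.
TOY_WEIGHTS = [2, -1, 3]


def toy_verify(public: dict, proof: bytes) -> bool:
    return sum(w * x for w, x in zip(TOY_WEIGHTS, public["inputs"])) == public["output"]


oracle = VerifiedRiskOracle("hypothetical-commitment", toy_verify)
oracle.submit("0xAnyKeeper", [4, 5, 6], 21, proof=b"")
```

The design choice is that access control moves from "who is submitting" to "does the proof check out", so a tampered output is rejected no matter who sends it.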

Attesting to inputs

If the algorithm’s input data are also on-chain values, they can be attested to during proof generation. This prevents attackers from tampering with inputs to mount adversarial attacks, which exist for ALL models [5].
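A minimal sketch of input attestation, assuming the verifier contract can read the same on-chain values the prover used: the proof’s public inputs carry a commitment to those values, and the contract recomputes the commitment from its own state and rejects any mismatch. SHA-256 over JSON is an illustrative stand-in; circuits more commonly use a SNARK-friendly hash or a storage proof against a state root. The state keys and values are hypothetical.

```python
import hashlib
import json


def input_commitment(values: dict) -> str:
    # Canonical encoding (sorted keys) so prover and contract hash identical bytes.
    return hashlib.sha256(json.dumps(values, sort_keys=True).encode()).hexdigest()


def check_attested_inputs(onchain_state: dict, public_inputs: dict) -> bool:
    """Accept only if the proof was generated over the values the chain actually holds."""
    return public_inputs["input_commitment"] == input_commitment(onchain_state)


chain = {"eth_usd": 3120, "pool_utilization_bps": 8450}  # hypothetical on-chain values
honest = {"input_commitment": input_commitment(chain)}
spoofed = {"input_commitment": input_commitment({**chain, "eth_usd": 1})}
```

A proof generated over a spoofed price carries a different commitment and fails the check, even if the proof itself is otherwise valid.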

Proof of performance

Before rolling out a model, the deployer can prove that it achieves a certain level of performance on a test set. This isn’t a perfect solution, though: if the test set is public, the deployer can simply train on it. The evaluation and test sets being proven over therefore need to be kept private.

Keeping evals private has proven challenging even in the LLM space, where eval sets seem to leak all the time [6].

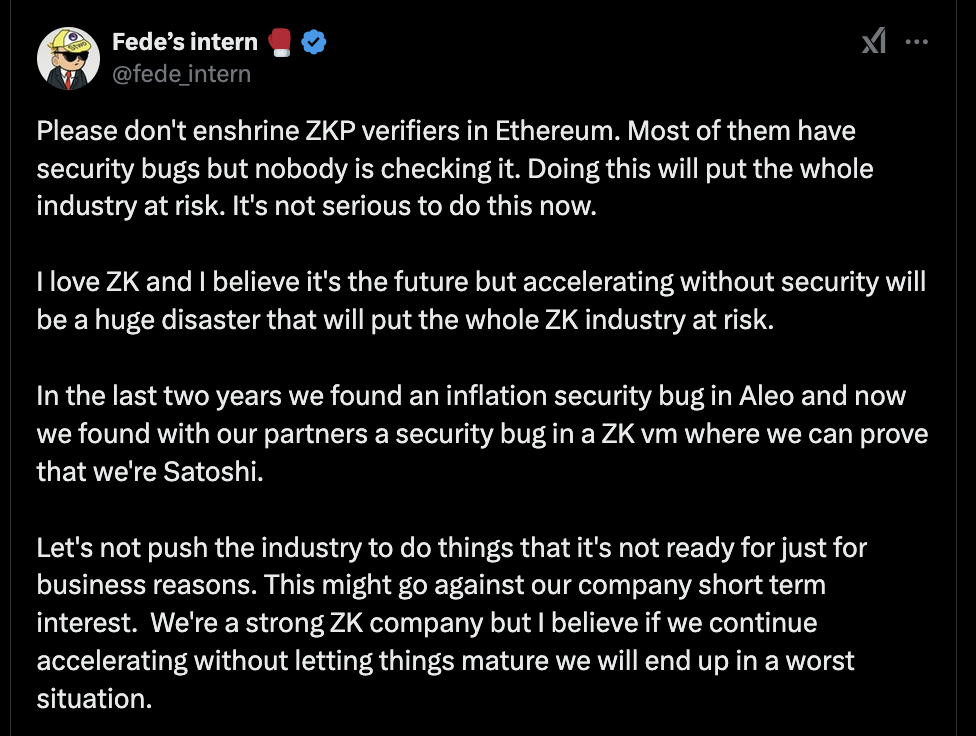
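The statement behind a proof of performance can be sketched the same way. Assume the holder of the private test set (ideally not the deployer, for the reason above) publishes a salted commitment to it in advance. The relation below then checks that a model reaches at least a stated accuracy on exactly that set. In a ZK proof, the test set, salt, and model would stay private witnesses; this Python version only spells out the check, using a toy threshold classifier, SHA-256 commitments, and accuracy in basis points as illustrative assumptions.

```python
import hashlib
import json
import os


def salted_commit(obj, salt: bytes) -> str:
    # Salted hash: hides the committed data while binding the committer to it.
    return hashlib.sha256(salt + json.dumps(obj).encode()).hexdigest()


def predict(threshold: int, x: int) -> int:
    # Toy classifier standing in for the model: label 1 when the feature exceeds the threshold.
    return int(x > threshold)


def performance_relation(public: dict, threshold: int, test_set: list, salt: bytes) -> bool:
    """Public: test-set commitment and minimum accuracy. Private: model, test set, salt."""
    if salted_commit(test_set, salt) != public["test_set_commitment"]:
        return False  # must be scored on the pre-committed set, not a cherry-picked one
    correct = sum(predict(threshold, x) == y for x, y in test_set)
    # Integer form of correct / len(test_set) >= min_accuracy_bps / 10_000
    return correct * 10_000 >= public["min_accuracy_bps"] * len(test_set)


salt = os.urandom(32)
test_set = [[1, 0], [3, 0], [7, 1], [9, 1], [5, 1]]  # hypothetical (feature, label) pairs
public = {"test_set_commitment": salted_commit(test_set, salt), "min_accuracy_bps": 8_000}
```

With these made-up numbers, thresholds 4 and 6 clear the 80% bar while threshold 8 does not, and scoring on any subset of the committed set fails the commitment check.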

Some caveats

ZK systems aren’t bulletproof. Smart contract verifiers can contain implementation bugs, trusted setup ceremonies can be compromised, and the mathematical foundations of certain proving systems might have undiscovered vulnerabilities. Moving computation on-chain doesn’t automatically guarantee security: the verification system itself must be rigorously audited and tested.

The situation with TEEs, often pushed as a silver-bullet solution to verifiability, is much worse: they suffer regular exploits and successful key-extraction attacks, and demand tremendous trust in single vendors. We hear concerns from our users about the inadequacy of TEE security again and again, and many have begun to view TEEs as a purely economic security model whose attack cost keeps falling.

But as ZK systems mature and are audited over and over again, they’re going to become the bedrock (if they aren’t already) for developers who prioritize the security of their users.

Conclusion

The overhead of on-chain verification is justified by the security guarantees it provides. As algorithmic systems gain more control over digital assets and smart contract execution, their integration with blockchains must preserve the core property of verifiability. Without cryptographic proofs of algorithmic integrity, blockchain systems risk becoming mere databases of unverified external computation; in other words, absolutely useless garbage.

Advances in zero-knowledge tech and efficient proof systems are making this verification increasingly practical. They preserve blockchain’s security while unleashing new algorithmic innovation and enabling things that weren’t possible with smart contracts alone, all while keeping blockchain’s core promise: trustless and verifiable computation for allllll.

References

[1] https://arxiv.org/pdf/2209.15259

[2] https://arxiv.org/pdf/2412.08969

[3] https://arxiv.org/pdf/2112.02797

[4] https://openai.com/index/trading-inference-time-compute-for-adversarial-robustness/